GraphNEx at the CHIST-ERA Projects Seminar 2024

What time is it?

I’m not sure which time zone I’m in!

- Olena -

Riding the waves between Helsinki and Stockholm, feeling the wind on the 12th-floor terrace, passing the Scandinavian islands, enjoying friendly conversations, meeting other researchers, and learning about the outcomes of a full year of research across different topics and projects: all of this was part of the annual event organised this year by CHIST-ERA aboard the cruise ship Silja Symphony, which aimed to bring researchers together and connect them with representatives of the research funding bodies. The cruise departed from Helsinki on April 16th, travelled to Stockholm (Sweden), and returned to Helsinki on April 18th.

Alessio, Olena, and Myriam, three of our team members representing GraphNEx, boarded the cruise with the other researchers involved in CHIST-ERA projects. On the first day, members of all research projects were split into parallel sessions, one for each CHIST-ERA call. GraphNEx is part of the 2019 Call on Explainable Machine Learning-based Artificial Intelligence (XAI). Like the other projects in the same call, GraphNEx is nearing its end after three years of hard work and research collaboration. Representatives from each XAI project were excited to present the outcomes and achievements of their consortium.

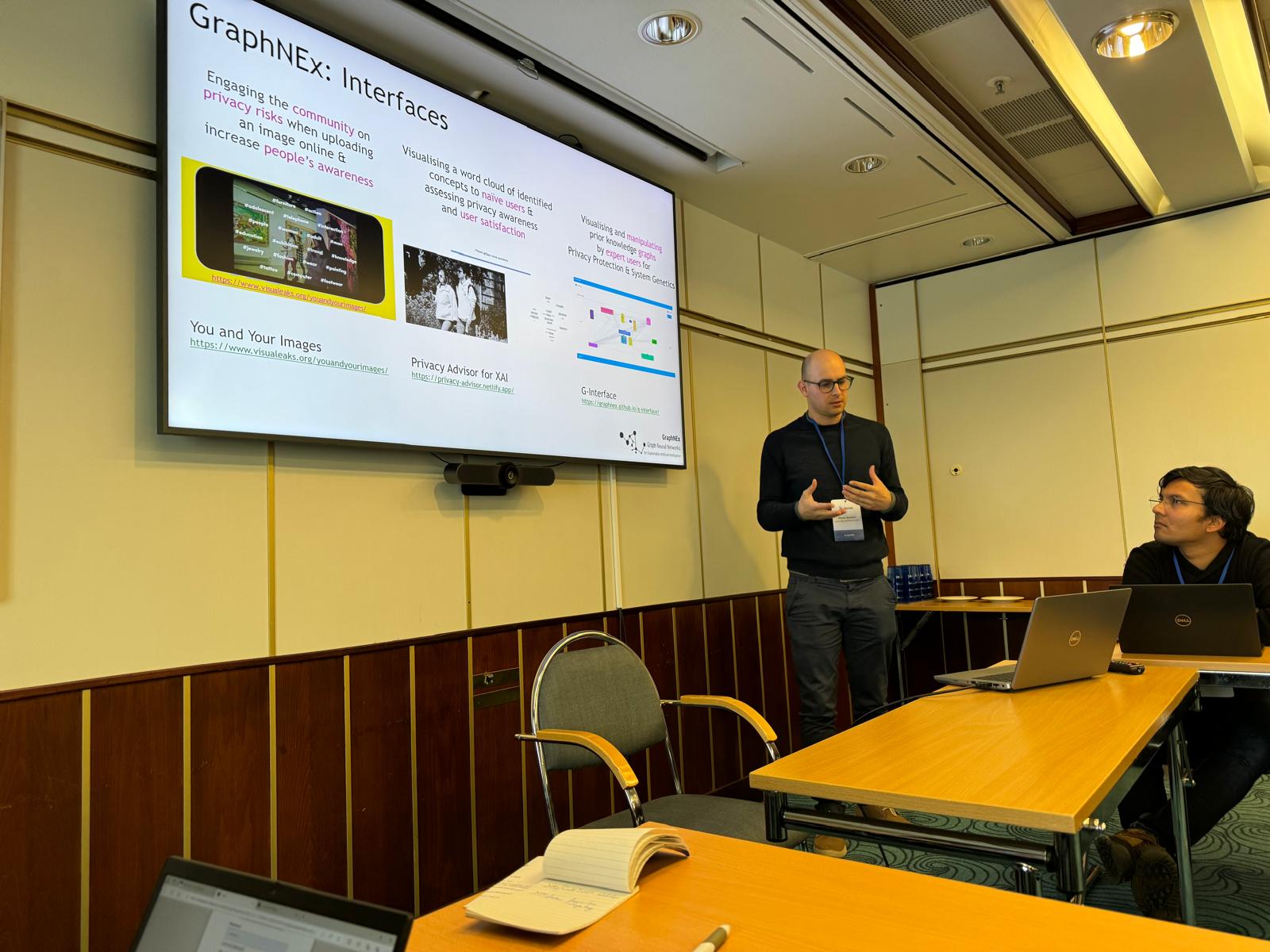

GraphNEx presentation. Alessio presented GraphNEx in a nutshell: the overall framework and its application to the two use cases, System Genetics and Privacy Protection. Over the last three years, GraphNEx has contributed theoretical methods for graph machine learning, as well as methods and benchmarks for the use cases. GraphNEx has also developed and made available several interfaces:

- You and Your Images, for engaging the community on the privacy risks of uploading images online and raising people’s awareness;

- Privacy Advisor for XAI, for showing non-expert users a word cloud of the identified concepts and assessing their privacy awareness and satisfaction; and

- G-Interface, which lets expert users visualise and manipulate prior knowledge graphs for Privacy Protection and System Genetics.

Alessio then answered questions from the representatives of the other projects.

As the cruise approached Stockholm on the morning of the second day, representatives of the projects in the XAI call still had time to collaborate on a joint presentation of the XAI topic, as in previous editions of the event. The presentation highlighted the major achievements and outputs of the projects, and covered the challenges of exploiting the results, the long-term vision, and future directions. This session helped the representatives build connections between projects, allowing them to map different instances of the same idea onto concepts shared across the projects.

Stockholm. Just before reaching Stockholm, Olena was amused: “Seeing two clocks with Finnish and Swedish time and not knowing which one applies to you at that moment was fun and memorable!” Once in Stockholm, our team members and the other researchers enjoyed some free time to explore the city and taste traditional food at local restaurants. While visiting the city, and especially the Royal Palace, Olena, Myriam, and Alessio asked themselves: “Where are the gardens? Can we ask the guard? Noooo...!” It turned out that, oh yes, you can! The guard had been listening and answered them.

The cruise then left Stockholm and headed back to Helsinki, arriving the following morning. The researchers attending the event gathered to deliver the joint presentations for each call year and to discuss the future of open review and open-access research, as well as the future of CHIST-ERA. Before arriving back in Helsinki, the researchers enjoyed a dinner together followed by some relaxing music in the ship’s main entertainment area, while discussing possible future ideas and collaborations.

Conclusion. The 2024 CHIST-ERA Projects Seminar was a great opportunity to think about the future of GraphNEx, in particular our priorities before the end of the project in July 2024 and the long-term support of the G-architecture and the G-interface.

A workshop on a common software architecture

The GraphNEx team gathered at the Idiap Research Institute in Martigny, Switzerland, surrounded by the beautiful Alps, for an intense two-day workshop in the warm and sunny spring weather of early May. The workshop was an opportunity for the team to meet in person again, strengthen existing relationships, and discuss the next steps of the project.

The focus was on progressing with a common software architecture for the two use cases: privacy protection and system genetics. The software should be flexible and modular to enable different users to train explainable predictive models on their own data.

During the workshop, the team updated the overall workflow agreed on at the first in-person GraphNEx workshop, which consists of four modules: data, model, explainability, and interface. The model and explainability modules each come with their own evaluation toolkit, whereas the interface allows users and experts to visualise explanations in the browser.
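To make the four-module workflow more concrete, here is a minimal sketch of how such modules could be wired together. It is not the GraphNEx code: every name below (`Pipeline`, `toy_data`, `train_threshold`, `occlusion`, `sparsity`, `text_interface`) is hypothetical, the model is a trivial threshold baseline, the explainer is a simple occlusion test, and the "interface" is a text stand-in for the browser front end.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vector = List[float]
Model = Callable[[Vector], int]


@dataclass
class Dataset:
    features: List[Vector]
    labels: List[int]


@dataclass
class Pipeline:
    """Hypothetical wiring of the four modules; each stage is a swappable function."""
    load_data: Callable[[], Dataset]                        # data module
    train_model: Callable[[Dataset], Model]                 # model module
    evaluate_model: Callable[[Model, Dataset], float]       # model evaluation toolkit
    explain: Callable[[Model, Vector], Vector]              # explainability module
    evaluate_explanations: Callable[[List[Vector]], float]  # explainability evaluation toolkit
    render: Callable[[Dict[str, object]], str]              # interface module (text stand-in)

    def run(self) -> str:
        data = self.load_data()
        model = self.train_model(data)
        explanations = [self.explain(model, x) for x in data.features]
        report = {
            "accuracy": self.evaluate_model(model, data),
            "explanation_sparsity": self.evaluate_explanations(explanations),
            "explanations": explanations,
        }
        return self.render(report)


def toy_data() -> Dataset:
    # Label is 1 when the first feature is positive; the second feature is noise.
    return Dataset([[1.0, 0.3], [-2.0, 0.5], [0.5, -1.0], [-0.1, 2.0]], [1, 0, 1, 0])


def train_threshold(data: Dataset) -> Model:
    # Trivial baseline (no fitting): predict 1 when feature 0 is positive.
    return lambda x: int(x[0] > 0)


def accuracy(model: Model, data: Dataset) -> float:
    correct = sum(model(x) == y for x, y in zip(data.features, data.labels))
    return correct / len(data.labels)


def occlusion(model: Model, x: Vector) -> Vector:
    # Attribution of feature i is 1.0 if zeroing it changes the prediction, else 0.0.
    base = model(x)
    scores = []
    for i in range(len(x)):
        masked = list(x)
        masked[i] = 0.0
        scores.append(float(model(masked) != base))
    return scores


def sparsity(explanations: List[Vector]) -> float:
    # Fraction of zero attributions: one rough proxy for "simple" explanations.
    values = [v for e in explanations for v in e]
    return sum(v == 0.0 for v in values) / len(values)


def text_interface(report: Dict[str, object]) -> str:
    return f"accuracy={report['accuracy']:.2f} sparsity={report['explanation_sparsity']:.2f}"


if __name__ == "__main__":
    pipeline = Pipeline(toy_data, train_threshold, accuracy, occlusion, sparsity, text_interface)
    print(pipeline.run())  # accuracy=1.00 sparsity=0.75
```

Because each stage is a plain function, a use case can plug in its own pre-processing inside the data stage without touching the model, explainer, or interface, which is one way to avoid the use-case-specific end-to-end pipelines the team wanted to steer clear of.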

From general discussions and presentations to focused sub-group activities, the GraphNEx team defined the steps to create a repository for developing the common architecture and interface. Here is a summary of the main decisions regarding the modules to be developed.

- Datasets commonly used by each research community, such as the Breast Cancer dataset from The Cancer Genome Atlas and the Image Privacy dataset, were selected for prototyping and testing. The team will curate the datasets into a common format for use by the models.

- Several models, such as graph convolutional networks and graph attention networks, were identified for integration into the common architecture. The team also discussed integrating simpler models as baselines for comparing learning performance and explainability. Training and testing the models on these datasets is challenging because the problems differ in nature. For instance, separate pre-processing steps should be included to avoid end-to-end pipelines that would become specific to one use case, breaking the common and modular principles behind the architecture.

- For the interface, the team members agreed on the need to visualise the predictions and explanations of the models at different resolutions. Discussions also emerged about an interactive component that would allow users to update the model or the explanations in the back end.

Stay tuned to know more about our upcoming repository with explainable models for privacy and system genetics!

View of the mountains in Martigny on the day of the workshop

GraphNEx at the CHIST-ERA Projects Seminar 2023

This year the annual CHIST-ERA Projects Seminar was back in person, held in Bratislava, Slovakia, on 4-5 April. The event brought together researchers involved in ongoing CHIST-ERA funded projects and representatives of their research funding organisations. The CHIST-ERA Projects Seminar aims to strengthen the scientific communities working on the topics of the CHIST-ERA calls and to support efforts toward new collaborative and interdisciplinary research. The GraphNEx team members contributed to the event with three posters, a project presentation, flyers, and a video demo.

The GraphNEx poster covered the objectives and challenges, the two use cases (System Genetics and Privacy Protection), the achievements, and the events organised over the last year to disseminate our work and engage with the general public.

Denis and Basile (EPFL) presented the Explainable Value Proposition Canvas (xVPC), which originated at the GraphNEx workshop in 2022 and was finalised in a peer-reviewed paper published at IEEE TALE. xVPC builds on the value proposition canvas widely used by entrepreneurs and intrapreneurs to create new services and products. The GraphNEx canvas is used in collaborative activities with blended design thinking in education. The canvas also supports the user-centred design of interfaces and helps the average citizen understand what private information they disclose when sharing pictures online. To know more about our xVPC, check out the poster here.

Myriam (ENSL) presented the limits of current explainability techniques for classification tasks on gene expression data. This is joint work with Anaïs and Maria (EPFL). Check out the poster for more details.

On the first day, project representatives were split into parallel sessions by topic. In each session, representatives presented their projects and achievements, and Alessio (QMUL) presented the progress of GraphNEx. In the second part of the session, representatives discussed achievements, challenges, needs, open issues, open science, technology transfer, a possible roadmap, and the support of CHIST-ERA. During this part of the session, Myriam and Alessio volunteered as co-chairs to help prepare the joint topic presentation covering all the projects, and Myriam co-presented the topic on the second day in front of the representatives of the projects from the other topics and the CHIST-ERA members.

The CHIST-ERA Projects Seminar was a great opportunity for us to interact in person, discuss current achievements, and plan collaborations. All of this while still enjoying walks and meals of traditional Slovak food around the beautiful city centre of Bratislava and the stunning castle overlooking the Danube!

GraphNEx at the Victoria & Albert museum (London, UK)

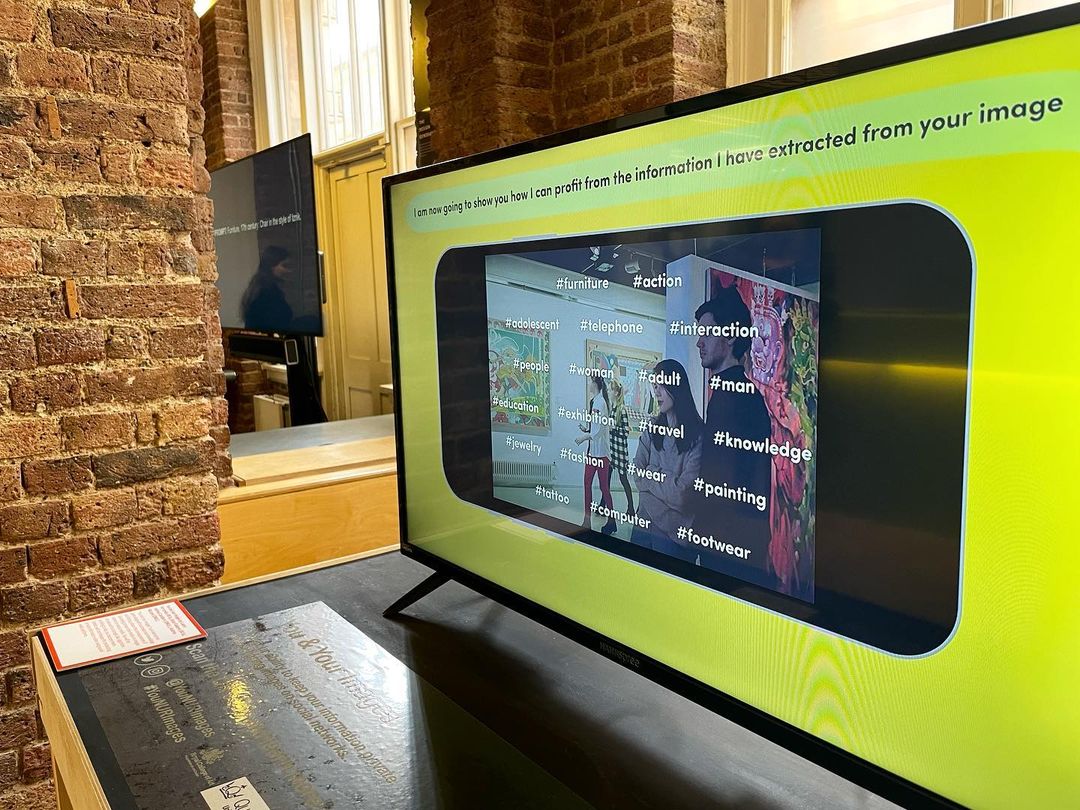

A GraphNEx demo on Privacy Awareness was presented at the Victoria and Albert Museum during the Friday Late event on 23 September 2022 and the Digital Design Weekend on 24-25 September 2022. At both events, Prof Andrea Cavallaro and Dr Riccardo Mazzon (QMUL), in collaboration with Prof Laura Ferrarello and Rute Fiadeiro (Royal College of Art, London), showcased “You and Your Images”, an interactive activity designed to increase knowledge and literacy about the consequences of disclosing private information in images shared on social media.

Both events were well attended, with an estimated total of over 2,000 participants. Over 100 participants took part in the You and Your Images activity, a web experience in which, after answering a set of questions, participants could upload an image, receive a set of keywords describing it, and grasp how this information may be exploited by a service provider.

From young professionals to experts on data privacy, we received a lot of positive feedback from participants, as the activity helped people better understand how much information images reveal and how this information could be used.


A workshop on eXplainable AI

Can we extrapolate semantic concepts and extract meaningful relationships that promote human interpretability from the current advances in explainable artificial intelligence (XAI)?

The GraphNEx team gathered for an intense three-day workshop (11-13 May 2022) near beautiful Lake Léman in Lausanne, Switzerland, to generate and share ideas around this question. The team focused on i) defining explainability in terms of user expectations, ii) outlining a value proposition canvas (adapted from business terminology), iii) designing an explainability protocol to quantify the relevance of the explanations, and iv) reflecting on the added value of a priori information.

The elements of an explainability protocol to quantify the relevance of the explanations were then defined. Explanations should be simple, stable, and robust to missing data. They should also be domain-appropriate and subject-dependent (user-centred). The team also carried out a thorough analysis of the added value of a priori information, which can be used to impose rules on the model and induce more constraints than are present in the data. Noticeably enriched by the experience, the team used the final workshop session to construct the project workflow and set the main milestones towards fulfilling users' expectations of XAI, while also planning the next in-person meeting.
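The post does not say how stability would be measured. As one possible illustration, and not the team's protocol, stability can be scored as the average overlap (Jaccard index) between the top-k features of a reference explanation and those of explanations computed on perturbed inputs, for example inputs with some features masked to simulate missing data. The function names `top_k`, `jaccard`, and `stability` are hypothetical.

```python
from typing import List, Sequence, Set


def top_k(attributions: Sequence[float], k: int) -> Set[int]:
    # Indices of the k features with the largest absolute attribution;
    # the stable sort breaks ties in favour of the lower index.
    order = sorted(range(len(attributions)), key=lambda i: -abs(attributions[i]))
    return set(order[:k])


def jaccard(a: Set[int], b: Set[int]) -> float:
    # Overlap between two feature sets: |a ∩ b| / |a ∪ b| (1.0 when both are empty).
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def stability(reference: Sequence[float], perturbed: List[Sequence[float]], k: int) -> float:
    """Mean top-k Jaccard overlap between a reference explanation and the
    explanations obtained after perturbing the input (e.g. masking features)."""
    ref = top_k(reference, k)
    scores = [jaccard(ref, top_k(p, k)) for p in perturbed]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    reference = [0.9, 0.1, -0.7, 0.05]
    perturbed = [[0.8, 0.2, -0.6, 0.0], [0.1, 0.9, -0.7, 0.0]]
    print(stability(reference, perturbed, k=2))  # (1 + 1/3) / 2, about 0.667
```

A score of 1.0 means the most important features never change under perturbation; lower scores flag explanations that would be hard for users to trust. Other criteria from the list, such as simplicity, would need separate measures.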

Welcome

Welcome to GraphNEx, a CHIST-ERA project that focuses on creating a graph-based framework for developing inherently explainable AI. Our plan is to embed symbolic meaning within AI frameworks in order to overcome the complexity of current networks that learn high-dimensional, abstract representations of data. We will decompose blocks of highly connected layers of deep learning architectures into smaller units in order to adaptively evolve a graphical knowledge base.

We will employ Graph Neural Networks to extrapolate semantic definitions and meaningful relationships from sub-graphs (concepts) within a knowledge base that can be used for semantic reasoning. By integrating the framework with game-based user feedback, we will ensure explainability and relevance to humans.

The GraphNEx framework will be validated in two scenarios: Clinical Genomics and Privacy in Activity Recognition. In the Clinical Genomics scenario, we will assist in discovering concepts in large, multimodal biological datasets, while in the Privacy in Activity Recognition scenario, we will protect users from unwanted, non-essential inferences in videos.