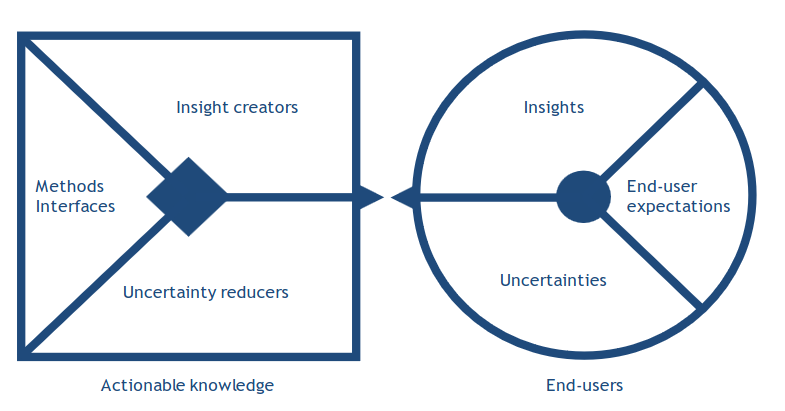

Explainability Value Proposition Canvas (xVPC)

A boundary object to facilitate the interaction between AI and HCI experts for designing user-centred XAI solutions.

xVPC builds on the value proposition canvas (VPC), widely used by entrepreneurs to design new products and services.

The GraphNEx canvas is useful not only for research and innovation in XAI but also in computer and data science education for collaborative design thinking activities.

The canvas was used to support the user-centred design of interfaces that help the average citizen understand what private information they disclose when sharing pictures online.

Compared with the original VPC, xVPC highlights (a) end-user expectations, expressed in terms of insights to be created and uncertainties to be reduced by the XAI solution being designed, and (b) the actionable knowledge provided by the XAI solution through methods and interfaces targeting a predefined end-user segment, such as AI experts, domain-specific practitioners, or the general public.

[publication]

[more details]

Achievements

Methods

-

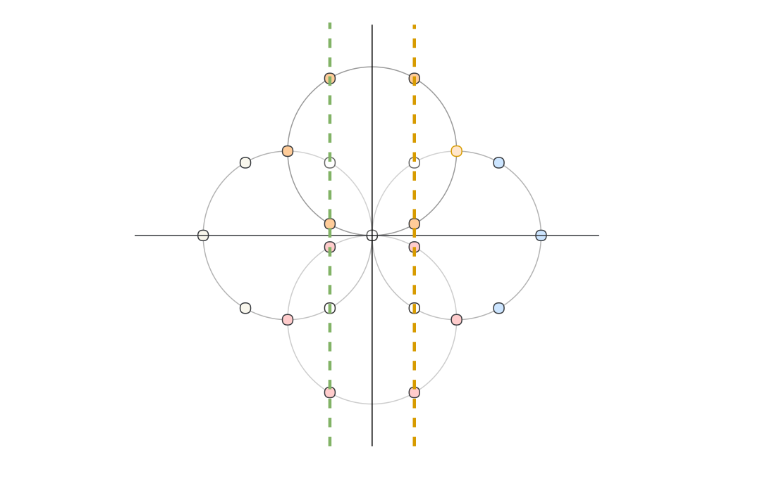

Novel harmonic analysis on directed graphs with random walk operator: From Fourier analysis to wavelets

Signals on (strongly) connected directed graphs are analysed with a novel harmonic analysis method that exploits the random walk operator. The resulting multi-scale analyses have been validated on semi-supervised learning and signal modelling problems.
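The role of the random walk operator can be illustrated with a short NumPy sketch. It assumes a weighted adjacency matrix `W` of a strongly connected directed graph, builds the row-stochastic operator P = D⁻¹W, computes its stationary distribution π, and orders the eigenvectors of P by their Dirichlet energy E(f) = ½ Σᵢⱼ πᵢ Pᵢⱼ |fᵢ - fⱼ|², a standard smoothness measure for Markov chains. The function names are hypothetical, and this is one plausible construction of a random-walk Fourier basis, not necessarily the exact one in the publication.

```python
import numpy as np


def random_walk_operator(W):
    """Row-normalise a nonnegative weight matrix: P = D_out^{-1} W."""
    W = np.asarray(W, dtype=float)
    out_degree = W.sum(axis=1)
    if np.any(out_degree == 0):
        raise ValueError("every node needs at least one outgoing edge")
    return W / out_degree[:, None]


def stationary_distribution(P):
    """Left eigenvector of P for eigenvalue 1, normalised to sum to one.
    It is unique and strictly positive when the graph is strongly connected."""
    vals, vecs = np.linalg.eig(P.T)
    k = np.argmin(np.abs(vals - 1.0))
    pi = np.real(vecs[:, k])
    return pi / pi.sum()


def dirichlet_energy(f, P, pi):
    """E(f) = 1/2 * sum_ij pi_i P_ij |f_i - f_j|^2 (small for smooth signals)."""
    f = np.asarray(f)
    diff2 = np.abs(f[:, None] - f[None, :]) ** 2
    return 0.5 * float(np.sum(pi[:, None] * P * diff2))


def random_walk_fourier_basis(P, pi):
    """Eigenvectors of P, normalised in l2(pi) and sorted by Dirichlet energy."""
    vals, vecs = np.linalg.eig(P)
    norms = np.sqrt(np.sum(pi[:, None] * np.abs(vecs) ** 2, axis=0))
    vecs = vecs / norms
    energies = np.array([dirichlet_energy(vecs[:, k], P, pi) for k in range(len(vals))])
    order = np.argsort(energies)
    return vals[order], vecs[:, order], energies[order]


# Toy strongly connected digraph: 0 -> 1 -> 2 -> 0, plus 2 -> 1.
W = np.array([[0, 1, 0],
              [0, 0, 1],
              [1, 1, 0]])
P = random_walk_operator(W)
pi = stationary_distribution(P)
freqs, basis, energies = random_walk_fourier_basis(P, pi)
```

The first sorted eigenvector is the constant one (eigenvalue 1, zero energy), the lowest "frequency"; eigenvectors with higher energy vary more across edges. Multi-scale wavelets would then be obtained by filtering in such a basis, which this sketch omits.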

[publication]

-

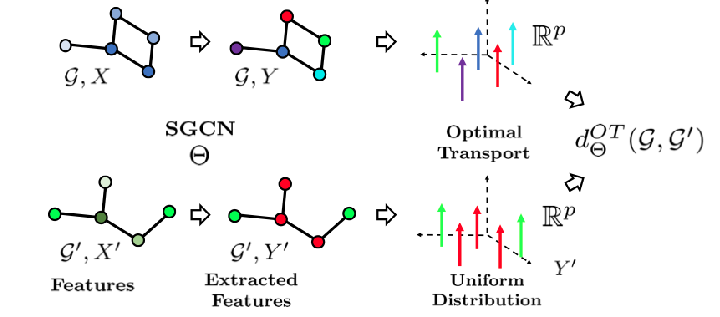

Simple way to learn metrics to compare signals on attributed graphs

A new Simple Graph Metric Learning (SGML) model, based on Simple Graph Convolutional Neural Networks (SGCN) and elements of Optimal Transport theory, that learns an appropriate distance from a database of labeled (attributed) graphs and improves the performance of simple classification algorithms such as k-NN. The model has few trainable parameters, so the distance can be trained quickly while maintaining good performance.
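A minimal NumPy sketch of these ingredients, under stated assumptions: node attributes are propagated SGC-style (K multiplications by the symmetrically normalised adjacency with self-loops), each graph becomes a cloud of node embeddings, and two graphs are compared with a sliced 2-Wasserstein distance between their clouds (an optimal-transport distance swapped in here for illustration), which then drives a k-NN classifier. The learnable weights that SGML trains are replaced by fixed projection directions `theta`, and all function names are hypothetical.

```python
import numpy as np


def sgc_embed(A, X, K=2):
    """SGC-style propagation: S^K X with S = D^-1/2 (A + I) D^-1/2."""
    A_hat = A + np.eye(A.shape[0])
    d = A_hat.sum(axis=1)
    S = A_hat / np.sqrt(np.outer(d, d))
    H = X
    for _ in range(K):
        H = S @ H
    return H


def sliced_wasserstein(H1, H2, theta, n_quantiles=50):
    """Approximate sliced 2-Wasserstein distance between two node-embedding
    clouds with uniform weights; theta holds projection directions (d, L)."""
    qs = np.linspace(0.0, 1.0, n_quantiles)
    q1 = np.quantile(H1 @ theta, qs, axis=0)   # 1-D quantile functions per direction
    q2 = np.quantile(H2 @ theta, qs, axis=0)
    return float(np.sqrt(np.mean((q1 - q2) ** 2)))


def knn_predict(query, database, labels, dist, k=1):
    """Majority label among the k database graphs closest to the query."""
    d = np.array([dist(query, g) for g in database])
    nearest = np.argsort(d)[:k]
    values, counts = np.unique(np.asarray(labels)[nearest], return_counts=True)
    return values[np.argmax(counts)]


theta = np.array([[1.0]])   # one fixed direction for 1-D node features
dist = lambda g1, g2: sliced_wasserstein(sgc_embed(*g1), sgc_embed(*g2), theta)

triangle = (np.ones((3, 3)) - np.eye(3), np.ones((3, 1)))
path = (np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float),
        np.array([[0.0], [5.0], [10.0]]))
label = knn_predict(triangle, [triangle, path], ["a", "b"], dist, k=1)
```

Keeping the learned part to a small linear map on top of parameter-free propagation is what makes this family of models cheap to train.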

[publication]

-

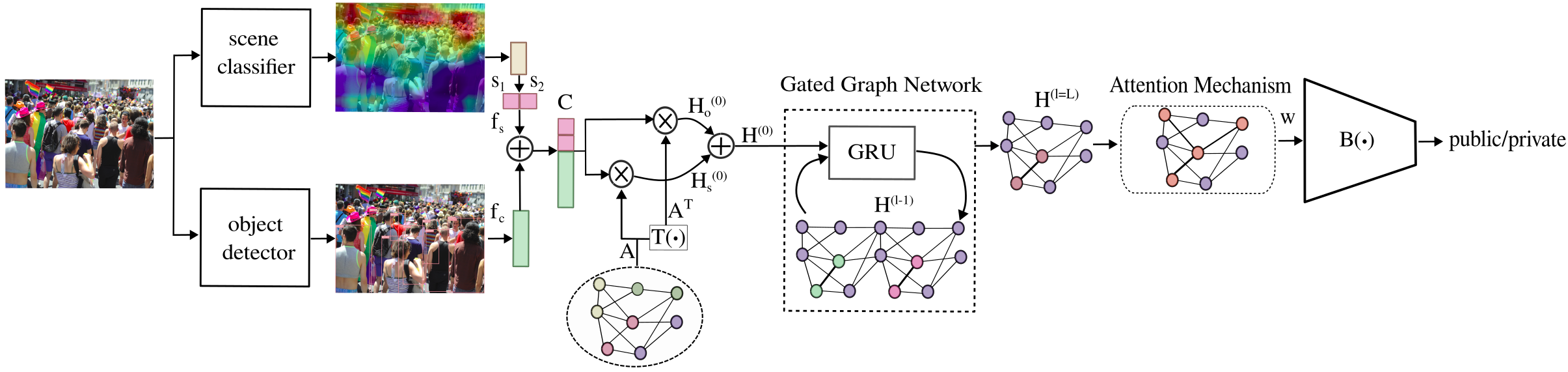

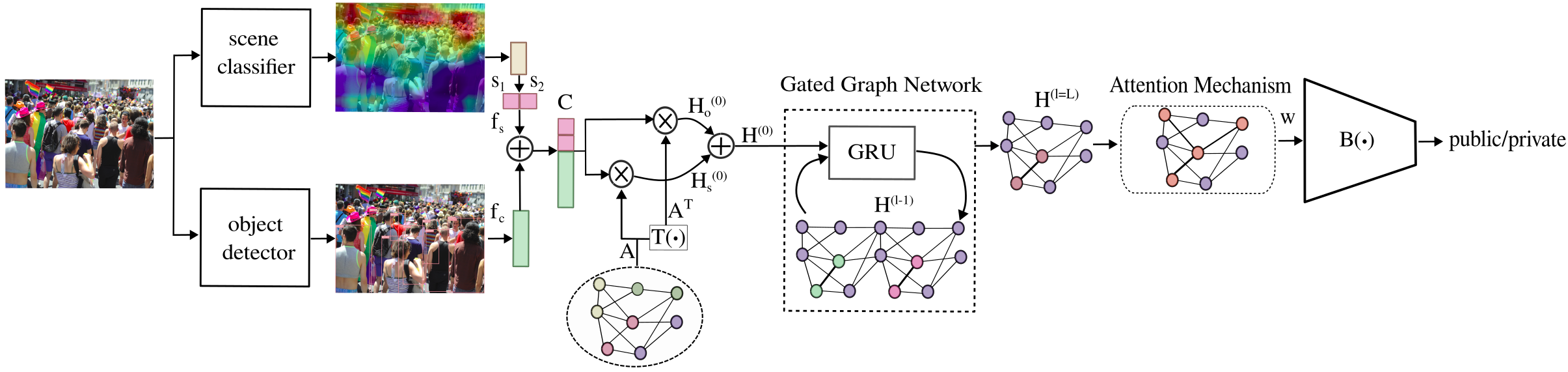

Graph Privacy Advisor (GPA)

A pipeline that uses existing convolutional neural networks to identify concepts (objects and scenes) and initialise their features in a graph (e.g., object cardinality); a graph neural network to update and propagate the features between nodes; and an MLP-based classifier to predict whether an image is private or public. Partially building on and improving an existing method (GIP), GPA models the nodes as the object categories plus two class nodes, and the edges as the binary co-occurrence of the nodes in at least one image of the training set. GPA outperforms the state-of-the-art GIP in classification performance on three existing datasets for image privacy classification: PicAlert (+1.6 percentage points (pp) in Macro F1-score), PrivacyAlert (+5.3 pp), and Image Privacy Dataset (+4.3 pp). Compared with GIP, GPA also uses a much smaller feature vector (2 elements instead of 4,096).
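The graph construction described above can be sketched as follows. The sketch assumes each training image is summarised by the set of object categories detected in it and its class label (0 and 1 here, a hypothetical encoding of private and public), and it treats an image's class node as present in that image, which is one reading of how the class nodes join the co-occurrence graph. Nodes 0 and 1 are the class nodes; object category `c` maps to node `2 + c`.

```python
import numpy as np


def cooccurrence_adjacency(images, n_categories, n_classes=2):
    """Binary adjacency: nodes i and j are linked if they co-occur in at least
    one training image. images: iterable of (set_of_category_ids, class_id)."""
    n_nodes = n_classes + n_categories
    A = np.zeros((n_nodes, n_nodes), dtype=int)
    for categories, class_id in images:
        # Assumption: the image's class node counts as present in that image.
        present = [class_id] + [n_classes + c for c in categories]
        for i in present:
            for j in present:
                if i != j:
                    A[i, j] = 1
    return A


training_images = [({0, 1}, 0), ({1, 2}, 1)]  # toy data with 3 object categories
A = cooccurrence_adjacency(training_images, n_categories=3)
```

Because the graph is fixed after training-set statistics are collected, only the node features change from one input image to the next.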

[publication]

-

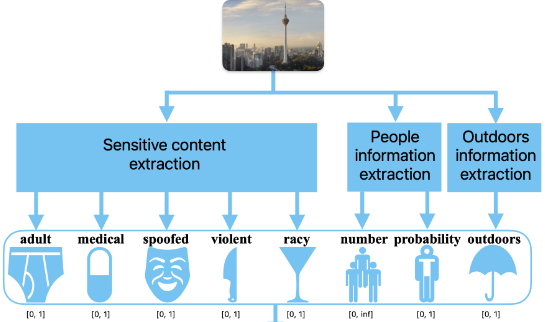

Human interpretable features for Privacy Protection

A set of human-interpretable features was defined and validated on two image privacy datasets, PicAlert and PrivacyAlert. Using these features with classical machine learning algorithms, such as logistic regression and a multilayer perceptron, achieves performance comparable to (only 2.5 percentage points (pp) lower than) that obtained with high-dimensional deep features extracted by recent convolutional neural networks (ResNet- and ConvNeXt-based models) or vision transformers (Swin models). Moreover, combining the selected features with deep features improves classification performance by 1 pp.

[publication]

-

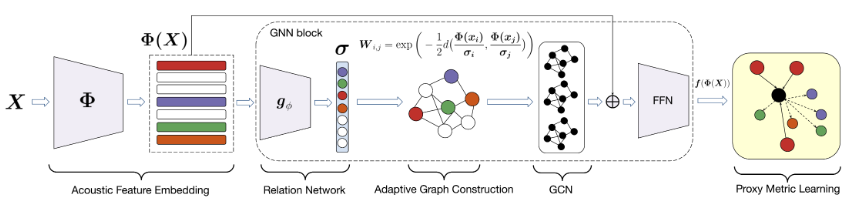

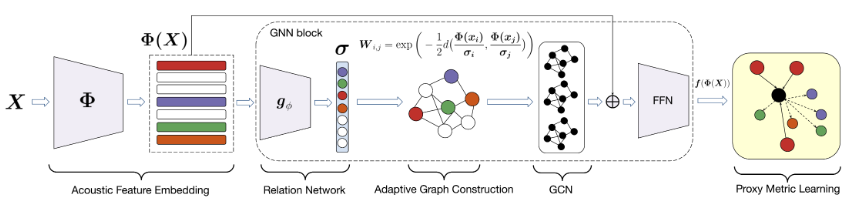

Adaptive Neighbourhood Graph Neural Network (AN-GNN)

A metric learning method in which a graph neural network (AN-GNN) constructs and refines a dynamic graph from acoustic features. On the Cyberlioz dataset for music information retrieval, AN-GNN achieves 96.4% retrieval accuracy, compared with 38.5% for a Euclidean metric and 86.0% for a multilayer perceptron. These results show the benefit of graph-based models for audio-based tasks.
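A batch-level graph of this kind can be sketched in NumPy. As a stand-in for the adaptation of the Gaussian RBF kernel mentioned in the software description, the sketch uses the well-known self-tuning (locally scaled) RBF kernel, where each embedding's bandwidth is its distance to its k-th nearest neighbour, followed by one GCN propagation step to refine the node embeddings. The kernel choice, `k`, and the weight matrix `Theta` are assumptions for illustration, not the published method.

```python
import numpy as np


def adaptive_rbf_graph(E, k=3):
    """Self-tuning RBF affinities: W_ij = exp(-||e_i - e_j||^2 / (s_i * s_j)),
    where s_i is the distance from e_i to its k-th nearest neighbour."""
    sq = np.sum((E[:, None, :] - E[None, :, :]) ** 2, axis=-1)
    dist = np.sqrt(sq)
    # Column 0 of each sorted row is the point itself (distance 0).
    sigma = np.maximum(np.sort(dist, axis=1)[:, k], 1e-12)
    W = np.exp(-sq / np.outer(sigma, sigma))
    np.fill_diagonal(W, 0.0)
    return W


def gcn_layer(W, H, Theta):
    """One GCN step: ReLU(D^-1/2 (W + I) D^-1/2 H Theta)."""
    W_hat = W + np.eye(W.shape[0])
    d = W_hat.sum(axis=1)
    S = W_hat / np.sqrt(np.outer(d, d))
    return np.maximum(S @ H @ Theta, 0.0)


rng = np.random.default_rng(0)
E = rng.normal(size=(8, 4))        # 8 embeddings in a batch, 4 dimensions
W = adaptive_rbf_graph(E, k=3)
H = gcn_layer(W, E, rng.normal(size=(4, 2)))
```

Scaling each bandwidth locally lets dense and sparse regions of the embedding space receive comparable neighbourhood sizes, which is the intuition behind an adaptive neighbourhood.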

[publication]

-

New explainability performance measure

An explainability performance measure that estimates the minimal number of features necessary for a prediction when the explanations take the form of a list of features (nodes, groups of nodes) ranked in order of importance for the predictions.
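One way to make this measure concrete is sketched below, assuming a classifier exposed as a hypothetical `predict` function, an input vector `x`, and a ranking of feature indices from most to least important. Features outside the top-k are replaced by a baseline value (0 here, an assumption), and the measure is the smallest k that reproduces the original prediction; groups of nodes could be handled the same way by ranking groups instead of single features. This is an illustration, not the definition used in the publication.

```python
import numpy as np


def minimal_sufficient_features(predict, x, ranking, baseline=0.0):
    """Smallest k such that keeping only the top-k ranked features (all others
    set to `baseline`) gives the same prediction as the full input.
    Returns None if even the full ranking does not reproduce it."""
    x = np.asarray(x, dtype=float)
    target = predict(x)
    for k in range(len(ranking) + 1):
        keep = np.asarray(ranking[:k], dtype=int)
        x_masked = np.full_like(x, baseline)
        x_masked[keep] = x[keep]
        if predict(x_masked) == target:
            return k
    return None


w = np.array([3.0, -1.0, 0.5, 0.0])
predict = lambda v: int(v @ w > 0)   # toy linear classifier
x = np.ones(4)
good = minimal_sufficient_features(predict, x, [0, 1, 2, 3])  # -> 1
poor = minimal_sufficient_features(predict, x, [1, 2, 0, 3])  # -> 3
```

For the same prediction, a lower value indicates that the explanation's top-ranked features are more sufficient on their own.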

[publication]

Software

-

Graph Privacy Advisor (GPA)

A pipeline for predicting whether an image is private or public. GPA uses a scene classifier (a convolutional neural network pretrained on the Places365 dataset), a trainable fully connected layer that transforms the predicted scene logits into the class node features (logits), and an object detector (YOLOv5 pretrained on the COCO dataset, with 80 object categories plus a background class) to localise objects in an image and count their instances. GPA uses the transformed scene information and the cardinality of the localised object categories as visual cues for a graph neural network (a gated graph neural network followed by a modified graph attention network) that classifies the image as public or private. The software provides training and testing code for GPA on several public datasets (PicAlert, PrivacyAlert, Image Privacy Dataset), and demo code to run GPA on user-provided images.
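The assembly of GPA's visual cues can be sketched as follows. The sizes come from the description above (365 Places365 scene logits, 80 COCO object categories, 2 class nodes); giving each node a single scalar feature, ignoring the background class, and the names `W_fc` and `b_fc` for the trainable fully connected layer are simplifying assumptions, not the released code.

```python
import numpy as np

N_SCENES = 365   # Places365 scene categories
N_OBJECTS = 80   # COCO object categories (background class ignored here)
N_CLASSES = 2    # class nodes: private and public


def initial_node_features(scene_logits, detected_labels, W_fc, b_fc):
    """Return one feature per node: the first N_CLASSES entries are class-node
    features from a fully connected layer over the scene logits, the remaining
    N_OBJECTS entries count detected instances of each object category."""
    class_features = scene_logits @ W_fc + b_fc            # (365,) -> (2,)
    labels = np.asarray(detected_labels, dtype=int)
    counts = np.bincount(labels, minlength=N_OBJECTS).astype(float)
    return np.concatenate([class_features, counts])


rng = np.random.default_rng(0)
W_fc = rng.normal(scale=0.01, size=(N_SCENES, N_CLASSES))  # untrained weights
b_fc = np.zeros(N_CLASSES)
x = initial_node_features(rng.normal(size=N_SCENES), [0, 0, 2], W_fc, b_fc)
```

The resulting vector would initialise the node states that the graph neural network then propagates over the co-occurrence graph.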

[open-source code]

-

XAI for Genomics

A benchmark of machine learning models that predict phenotype from gene expression data on real and simulated datasets, with their explainability evaluated using several scores. The public repository includes 3 datasets with real samples from TCGA (PanCan, BRCA, KIRC) and 5 datasets with simulated data (SIMU1, SIMU2, SimuA, SimuB, SimuC); 4 machine learning models: logistic regression (LR), multilayer perceptron (MLP), diffusion + logistic regression (DiffuseLR), and diffusion + multilayer perceptron (DiffuseMLP); correlation graphs over all features computed from the training examples; and 4 explainability performance measures: prediction gaps with features taken in descending order of importance (PGI), in ascending order of importance (PGU), or in random order (PGR), and feature agreement (FA). Comparative results in terms of classification accuracy and explainability across all models and datasets are provided for reproducibility.
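The correlation graph and the diffusion step behind DiffuseLR and DiffuseMLP can be sketched as below. The thresholding of absolute Pearson correlations, the lazy random-walk smoothing rule, and the parameters `threshold`, `alpha` and `steps` are assumptions chosen for illustration; the repository may build and apply its graph differently. The diffused expression matrix would then be fed to a logistic regression or an MLP.

```python
import numpy as np


def correlation_graph(X_train, threshold=0.5):
    """Gene-gene graph from training samples: weight |corr| if |corr| >= threshold."""
    C = np.nan_to_num(np.corrcoef(X_train, rowvar=False))  # constant genes -> NaN -> 0
    A = np.where(np.abs(C) >= threshold, np.abs(C), 0.0)
    np.fill_diagonal(A, 0.0)
    return A


def diffuse(X, A, alpha=0.5, steps=1):
    """Smooth each sample over the gene graph: X <- (1 - alpha) X + alpha X P^T,
    with P the row-normalised adjacency (isolated genes keep their own value)."""
    deg = A.sum(axis=1)
    P = np.zeros_like(A)
    connected = deg > 0
    P[connected] = A[connected] / deg[connected][:, None]
    isolated = np.flatnonzero(~connected)
    P[isolated, isolated] = 1.0
    X = np.asarray(X, dtype=float)
    for _ in range(steps):
        X = (1 - alpha) * X + alpha * X @ P.T
    return X


X_train = np.array([[1.0, 2.0, 0.0],
                    [2.0, 4.0, 1.0],
                    [3.0, 6.0, 0.0]])   # genes 0 and 1 are perfectly correlated
A = correlation_graph(X_train)
X_smooth = diffuse(np.array([[1.0, 3.0, 5.0]]), A)   # -> [[2., 2., 5.]]
```

Building the graph from training examples only, as the repository description states, keeps test samples out of the feature graph.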

[open-source code]

-

Adaptive Neighbourhood Graph Neural Network (AN-GNN)

Public release of the open-source code for AN-GNN for music retrieval based on human similarity judgements. PSGNN uses a pretrained network (an OpenL3 model trained on both video and audio data from AudioSet) to extract embeddings from the given audio files, and constructs a graph from the embeddings in a batch using a novel adaptation of the Gaussian RBF kernel. The node embeddings are then refined by a stack of graph convolutional layers and clustered with a Proxy Anchor loss objective. The model is trained to predict the cluster label (19 clusters) of each input audio file and achieves 96.4% accuracy on this task.
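The standard Proxy Anchor objective can be written in a few lines of NumPy. This is a generic forward-pass sketch using cosine similarity, with `alpha=32` and `delta=0.1` as assumed defaults; it is not code taken from the AN-GNN repository. In training, the embeddings and one learnable proxy per cluster (19 in the release) would be optimised jointly with automatic differentiation.

```python
import numpy as np


def proxy_anchor_loss(X, y, proxies, alpha=32.0, delta=0.1):
    """Proxy Anchor loss for embeddings X (n, d), integer labels y (n,) and
    one proxy per class (C, d), using cosine similarity."""
    Xn = X / np.linalg.norm(X, axis=1, keepdims=True)
    Pn = proxies / np.linalg.norm(proxies, axis=1, keepdims=True)
    S = Xn @ Pn.T                                        # (n, C) similarities
    n_classes = proxies.shape[0]
    pos = y[:, None] == np.arange(n_classes)[None, :]    # sample i belongs to class c
    pos_exp = np.where(pos, np.exp(-alpha * (S - delta)), 0.0)
    neg_exp = np.where(~pos, np.exp(alpha * (S + delta)), 0.0)
    has_pos = pos.any(axis=0)                            # proxies with positives in batch
    pos_term = np.log1p(pos_exp.sum(axis=0))[has_pos].sum() / has_pos.sum()
    neg_term = np.log1p(neg_exp.sum(axis=0)).sum() / n_classes
    return float(pos_term + neg_term)


proxies = np.eye(3)   # 3 toy clusters in 3-D
X = np.eye(3)
aligned = proxy_anchor_loss(X, np.array([0, 1, 2]), proxies)
misaligned = proxy_anchor_loss(X, np.array([1, 2, 0]), proxies)
```

Each proxy acts as an anchor that pulls all same-cluster embeddings in the batch towards it and pushes the others away, so the loss is much lower when embeddings sit on their own cluster's proxy.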

[open-source code]

Interfaces

-

You and Your Images

In collaboration with the Royal College Academy (UK), “You and your images” engages the community on the privacy risks of uploading images online and raises people’s awareness of them. After answering a set of questions, people can upload an image, receive a set of keywords describing it, and grasp how this information may be exploited by a service provider. A demo of the interactive website was presented at the Victoria and Albert (V&A) Museum in London, at the Friday Late event on 23 September 2022 and the Digital Design Weekend on 24-25 September 2022. Both events were well attended, with an estimated total of over 2,000 attendees, and over 100 participants, from young professionals to data privacy experts, took part in the "You and Your Images" activity. Participants commented positively, noting that “You and your images” helped them better understand how much information images reveal and how this information could possibly be used.

[web interface]

-

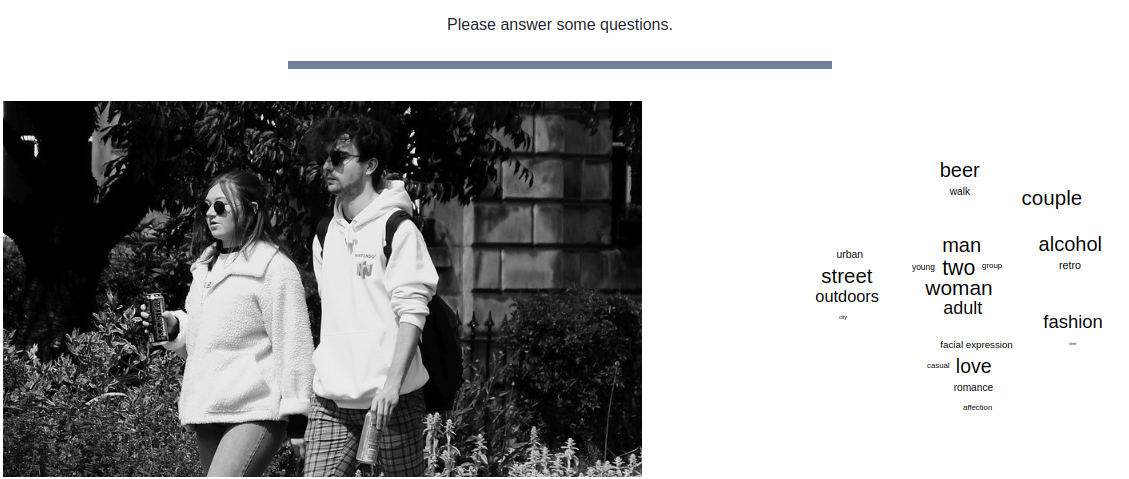

Privacy Advisor for XAI: User study and word-cloud visualisation

An interface that shows a naive user a word cloud of the concepts predicted by a general-purpose classifier for an input image. The interface was used in a user study assessing the privacy degree of an image (privacy awareness) and user satisfaction (quality and utility of the interface), i.e., how useful the word cloud was when reviewing the initial assessment. The results of this study suggest that displaying the keywords that represent privacy concepts present in the input image as a word cloud could make users more aware of an image’s potentially private nature.
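A word cloud like this one needs a mapping from classifier scores to font sizes. The sketch below, with hypothetical names and a simple linear scaling between `min_size` and `max_size` points, keeps the `top_k` most confident concepts; the actual interface may weight or lay out keywords differently.

```python
def word_cloud_sizes(concept_scores, top_k=10, min_size=12, max_size=48):
    """Map the top_k concept scores linearly to font sizes in [min_size, max_size]."""
    top = sorted(concept_scores.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    if not top:
        return {}
    hi, lo = top[0][1], top[-1][1]
    span = hi - lo
    sizes = {}
    for concept, score in top:
        if span == 0:
            sizes[concept] = max_size        # all scores equal: same size
        else:
            sizes[concept] = round(min_size + (score - lo) / span * (max_size - min_size))
    return sizes


scores = {"person": 0.9, "beach": 0.5, "car": 0.1}   # made-up classifier outputs
print(word_cloud_sizes(scores))   # {'person': 48, 'beach': 30, 'car': 12}
```

Scaling relative to the displayed concepts, rather than to absolute probabilities, keeps the cloud readable even when the classifier is uncertain overall.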

[web interface]

-

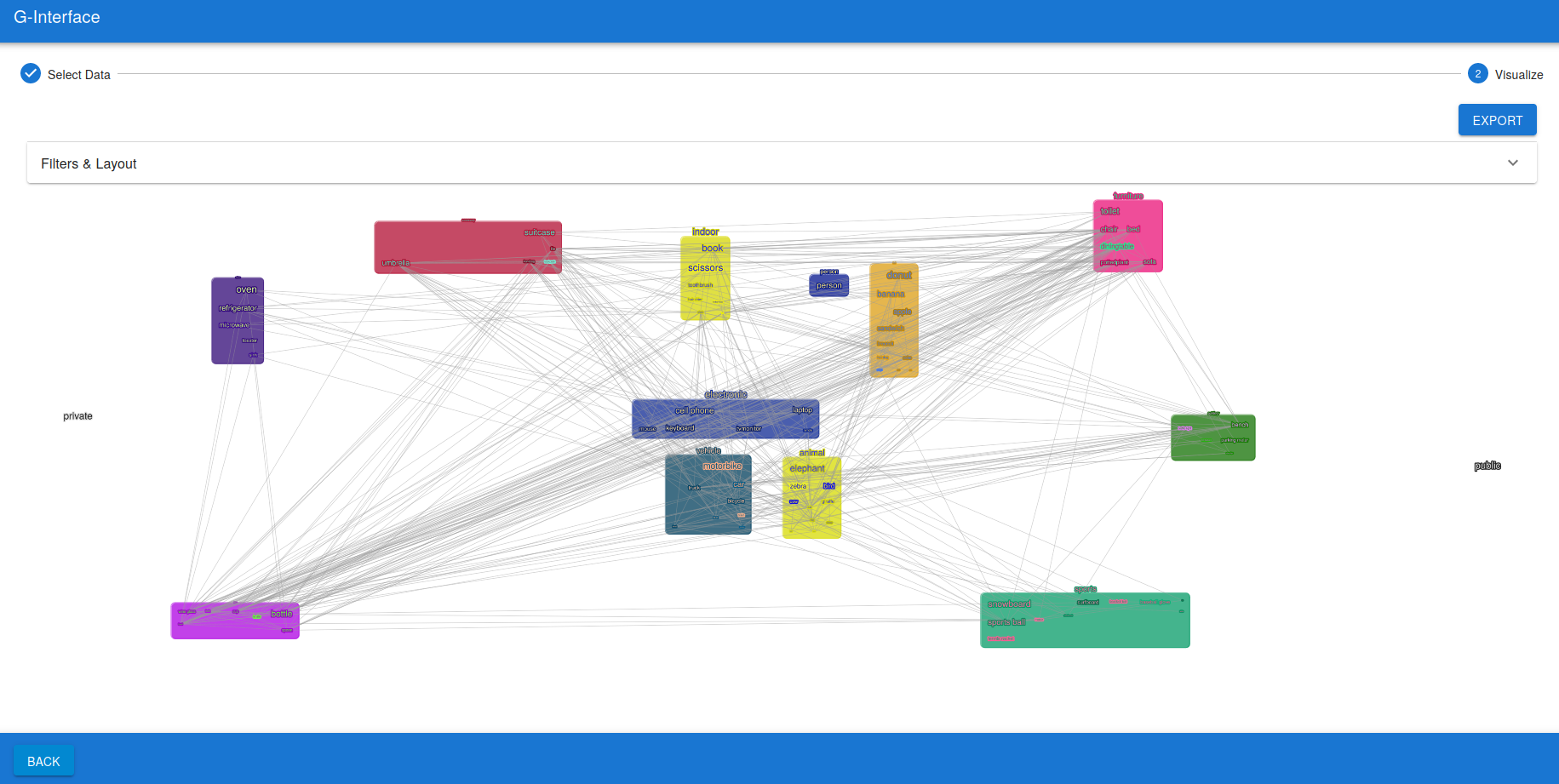

G-Interface: Visualising and manipulating prior knowledge graphs for Privacy Protection and System Genetics

G-Interface, based on the Cytoscape.js library, visualises knowledge graph data related to the models developed within GraphNEx for the Privacy Protection and System Genetics use cases. G-Interface provides a menu to filter and manipulate the graph, enabling model refinement. The software, or parts of it (e.g., word clouds, layouts), can be integrated into other platforms, projects, and experiments.
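Cytoscape.js accepts graphs as a list of element objects whose `data` field holds an `id` (and, for edges, `source` and `target`). The Python sketch below, with hypothetical function and field names beyond those three keys, exports a weighted knowledge graph in that shape and drops edges below a weight threshold, mimicking the kind of filtering G-Interface offers in its menu; it is not G-Interface's own code.

```python
import json


def to_cytoscape_elements(nodes, edges, min_weight=0.0):
    """nodes: {node_id: label}; edges: [(source_id, target_id, weight), ...].
    Returns Cytoscape.js-style elements, keeping edges with weight >= min_weight."""
    elements = [{"data": {"id": str(nid), "label": label}} for nid, label in nodes.items()]
    for i, (source, target, weight) in enumerate(edges):
        if weight >= min_weight:
            elements.append({"data": {"id": f"e{i}", "source": str(source),
                                      "target": str(target), "weight": weight}})
    return elements


nodes = {"person": "person", "beach": "beach", "private": "private"}
edges = [("person", "private", 0.8), ("beach", "private", 0.2)]
elements = to_cytoscape_elements(nodes, edges, min_weight=0.5)
payload = json.dumps(elements)  # JSON that a browser page could pass as `elements`
```

Filtering before export keeps the browser-side graph small; an interactive menu would instead hide or show elements that are already loaded.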

[web interface] [open-source code]

Other

-

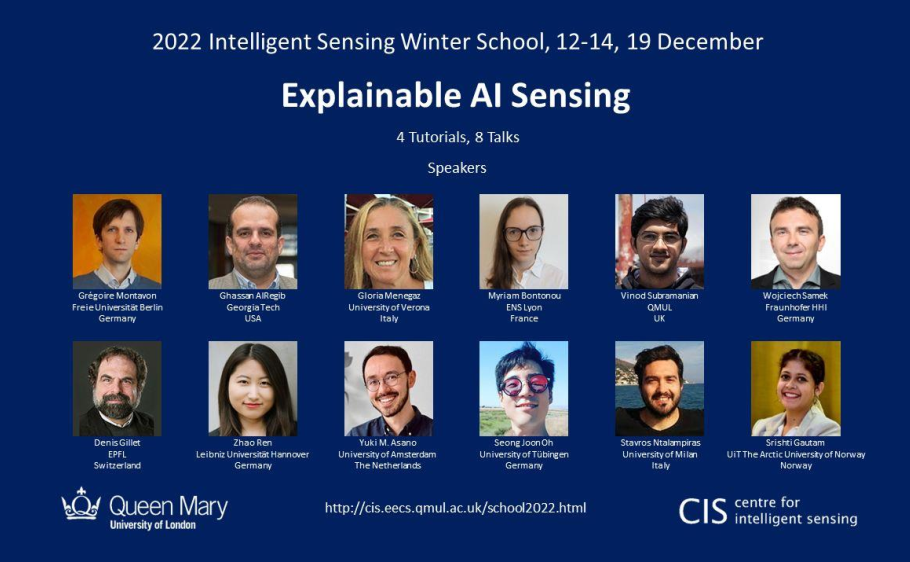

The 2022 Intelligent Sensing Winter School on XAI Sensing

Organised in collaboration with the Centre for Intelligent Sensing at Queen Mary University of London, the Winter School hosted 4 tutorials and 8 talks by international experts presenting their research on Explainable Artificial Intelligence (XAI) and Interpretable Machine Learning. The audience was also invited to submit an expression of interest for a short (5-10 minute) presentation of their work, and 6 people gave a short talk on the last day. The event received more than 600 registrations, with attendance peaking at around 200 participants.

[webpage]